Personal Injury Attorneys

Roblox Sexual Abuse Lawsuits

Nationwide Legal Representation for Victims of Online Child Exploitation

Across the U.S., families are filing Roblox sexual abuse lawsuits after discovering that predators used Roblox and connected platforms like Discord to sexually exploit children. What was marketed as a safe, popular online gaming platform became a space where adults groomed minors through private chats, games, and off-platform communication.

Predators have exploited Roblox’s weak content moderation, its lack of age verification, and in-game features that give them direct access to minors. In many cases, families had no idea this danger existed until their child had already been exposed to sexually explicit messages or worse.

Our law firm represents families whose children were harmed by the design and failures of platforms that claimed to be safe for young users. These cases involve severe abuse, including coercion and lasting psychological harm.

If your child was targeted or abused on Roblox or Discord, we’re here to help you understand your legal rights. Our team brings deep experience in child sexual abuse litigation, with a focus on privacy, trauma-informed support, and strategic legal action.

Legal Developments in Filed Lawsuits

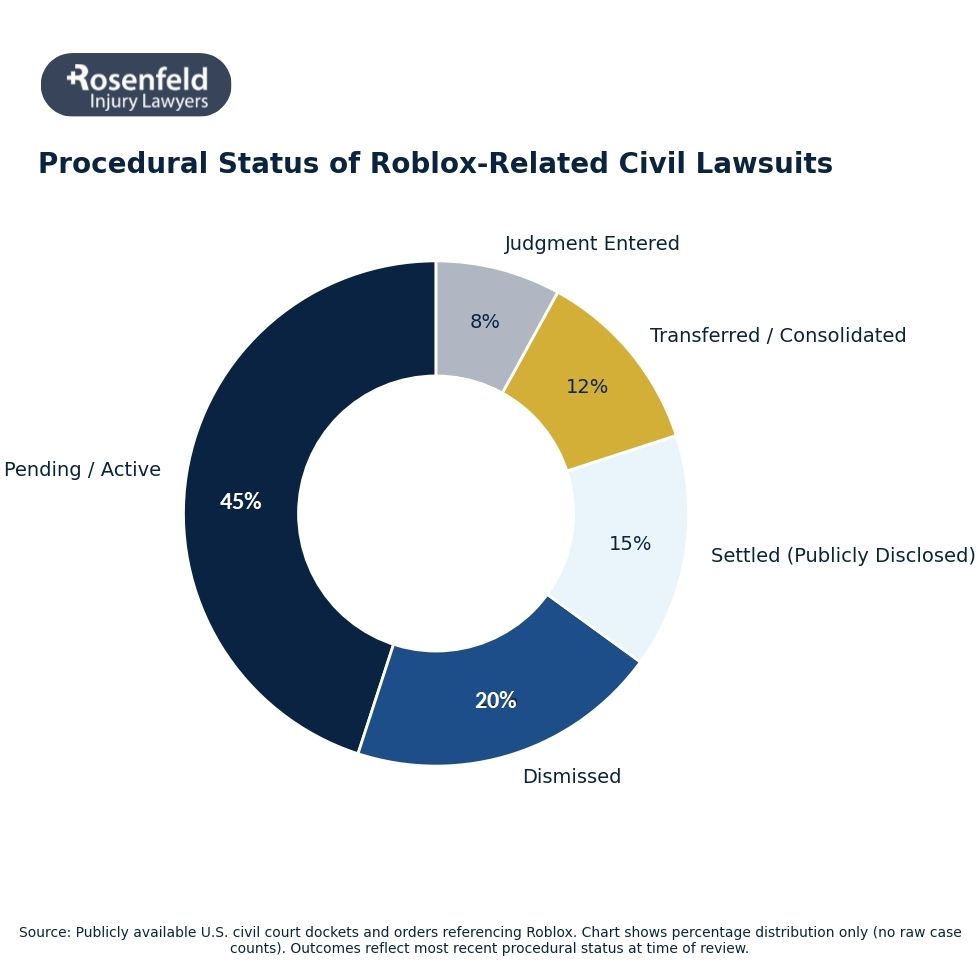

Over the past several years, a wave of Roblox lawsuits has exposed how deep and widespread the platform’s child-protection failures were. These lawsuits allege that Roblox Corporation (and in many cases, Discord Inc.) failed to prevent sexual predators from using their systems to groom young users, share sexually explicit content, and manipulate children.

Below is a timeline of major legal actions, investigations, and lawsuits filed against Roblox and associated platforms for allowing sexual exploitation to take place under their watch.

September 2025 – Federal Cases Consolidated Under MDL-3166

As abuse reports and lawsuits surged, the U.S. Judicial Panel on Multidistrict Litigation consolidated dozens of federal claims into MDL-3166, focused on child sexual exploitation linked to Roblox and Discord. The consolidated litigation accuses both companies of enabling widespread abuse by failing to monitor private chats, enforce age restrictions, and moderate inappropriate content.

The MDL now allows families across the country to pursue legal claims together and share evidence of how these platforms ignored known risks.

August 2025 – Louisiana Attorney General Sues Roblox

In August 2025, Louisiana’s Attorney General filed a lawsuit against Roblox Corporation, accusing the company of failing to protect children from large-scale sexual exploitation. The complaint describes how Roblox ignored internal warnings and failed to stop known predators from using the platform to engage with minors.

According to the lawsuit, Roblox acted as a hub for child sexual abuse material by allowing adult users to send sexually explicit photos, contact underage users directly, and bypass parental controls.

August 2025 – California Lawsuit Filed by Mother of 10-Year-Old Girl

A mother in California filed a claim after discovering her daughter was sexually exploited by an adult predator who initiated contact through a Roblox account, sent inappropriate messages, and manipulated the child into sending explicit photos. The communications later moved to Discord, where the abuse escalated.

The lawsuit alleges that neither company responded to red flags, despite public claims that their platforms were safe for children.

August 2025 – Federal Charges in Child Abduction Case

Also in August, a 27-year-old man was arrested after he abducted a child he met through Roblox. The predator used Discord to continue contact, locate the child’s home, and drive her across state lines. Federal authorities intervened, and the young girl was recovered.

A federal lawsuit filed in connection with this case claims that both platforms failed to implement any effective restrictions to prevent this from happening.

August 2025 – Georgia Family Sues Over Predatory Messaging

A 9-year-old in Georgia was targeted by multiple predators who posed as peers and began sending sexually explicit images and coercive messages through Roblox. When the child tried to end the conversation, the predators threatened to release her photos publicly.

The lawsuit filed by the family points to Roblox’s inadequate safety tools, claiming the company failed to block or remove adult accounts posing as children.

July 2025 – Cross-State Grooming and Travel Attempts

Two separate lawsuits were filed in July after predators used Roblox to groom teenage girls and attempted to meet them in person. In both cases, initial contact was made through the gaming platform, then transitioned to private Discord channels.

These cases add to the growing number of complaints that Roblox’s platform failures allowed abusers to operate freely, leaving vulnerable users exposed to real-world dangers.

May 2025 – Arrest of Christian Scribben and Related Lawsuits

Federal authorities arrested Christian Scribben in May 2025 for orchestrating a large-scale grooming scheme involving Roblox and Discord. He targeted children as young as eight, coercing them into sending sexually explicit photos and videos that were later distributed on Discord.

Multiple Roblox sexual abuse lawsuits claim the platform ignored warnings, user complaints, and signs of grooming activity connected to Scribben’s account.

May 2025 – 13-Year-Old Assaulted After Grooming on Roblox and Discord

In Texas, a lawsuit against Roblox was filed after a 13-year-old girl was groomed and later assaulted by a man who used Discord and Roblox to contact her and locate her home. Other lawsuits filed in California and Georgia that month also described minors being manipulated into producing child sexual abuse content.

One case involved a 16-year-old girl who was trafficked across state lines and sexually assaulted, with the predator using both platforms to facilitate contact.

April 2025 – Multiple Lawsuits Allege Platform Negligence

Two lawsuits in April 2025 highlighted recurring problems across both platforms. In one case, an adult man exploited more than 25 minors on Roblox, encouraging them to share inappropriate images through game-based interactions.

These lawsuits claim Roblox failed to verify user ages or monitor interactions, even after repeated safety alerts and user complaints.

2024 – Developer Exploits Victim Using Roblox Access

A nationwide investigation uncovered that Arnold Castillo, a Roblox game developer, used his position to groom and traffic a 15-year-old girl. During her abduction, Castillo referred to her as a “sex slave,” and she endured repeated sexual abuse over eight days.

The same investigation also identified a registered sex offender in Kansas who contacted an 8-year-old through Roblox, and an 11-year-old New Jersey girl who was abducted after engaging with an adult on the platform.

October 2022 – Class Action Lawsuit Names Roblox Corporation

A class action lawsuit was filed in California against Roblox Corporation, Discord Inc., and other tech companies, accusing them of enabling child exploitation through interconnected social media platforms. According to the complaint, the companies failed to implement effective safety measures or limit adult contact with children, allowing abuse to occur across multiple apps.

2019 – Early Lawsuit Highlights the Dangers of Online Platforms

As early as 2019, a Roblox sexual abuse lawsuit exposed the platform’s vulnerability to predators. A California mother found her son had been groomed and coerced into sending explicit photos to an adult predator. The case was one of the first warnings about how online platforms can be used by abusers.

What Are the Biggest Child Safety Concerns Tied to Roblox?

As one of the most widely used online gaming platforms among children and teens, Roblox has come under intense legal and public scrutiny for putting countless young users at risk of grooming, coercion, and sexual exploitation.

Families across the country have described devastating experiences involving emotional and psychological harm, online manipulation, and in some cases, physical abuse and trafficking. The lawsuits claim that the platform failed to take even basic steps to protect children from abuse.

Below are five key failures repeatedly cited in legal filings and public investigations.

Open Chat Features That Invite Abuse

The chat and messaging tools built into the Roblox platform have been at the center of many lawsuits involving child sexual exploitation. Instead of creating a safe, limited communication system for children, Roblox allowed direct messaging between users of all ages, including adults posing as kids.

Predators used these features to gain a child’s trust, share sexually explicit content, and eventually move conversations off-platform to apps like Discord, where grooming and abuse could escalate unchecked. According to the lawsuits, Roblox ignored repeated warnings about this risk and failed to provide safeguards that would have blocked inappropriate communication before harm occurred.

Sexual Content Embedded in User-Created Games

While the ability to create and share games is a major part of Roblox’s appeal, it has also become a tool for abuse. Several Roblox lawsuits highlight games that included inappropriate avatars and simulations of sexual behavior, many of which remained available to children for months despite user complaints.

In some cases, adult users exploited these games to lure children into sharing explicit photos or to initiate sexually abusive conversations under the guise of gameplay. Critics argue that Roblox did not enforce its content rules and failed to remove inappropriate material in a timely manner, creating an unsafe environment where children were left unprotected.

Lack of Age Verification and Identity Checks

One of the most frequent criticisms in both legal filings and media reports is Roblox’s lack of effective age verification. The platform relies largely on self-reported birthdates, which allows adult predators to create accounts as children and communicate freely with real minors.

Several lawsuits allege that this loophole made it easy for abusers to target vulnerable users, solicit inappropriate images, and encourage minors to participate in illegal activity. Attorneys argue that parental consent and stricter controls should have been mandatory from the beginning, and that Roblox’s failure to adopt these basic protections contributed to widespread abuse and trauma.

Delayed and Inconsistent Moderation

Parents and victims involved in Roblox sexual abuse lawsuits consistently report long delays in removing harmful content or blocking accused predators, even after multiple reports. Offensive usernames and inappropriate games often remained live for days or weeks, giving predators more time to cause harm.

In many cases, Roblox’s moderation tools failed to detect or respond to clear violations of its own policies. Lawsuits claim Roblox put profits and engagement ahead of platform safety by allowing this inappropriate material to spread, causing significant emotional distress and a breakdown of trust between families and the platform.

Encouragement of Off-Platform Contact

A major safety concern raised in nearly every Roblox lawsuit is the company’s role in facilitating off-platform communication. Many games and user experiences within Roblox prompt players to join external chats, often through Discord. This hand-off from the game to a messaging app creates a gap in monitoring, where sexual abuse and coercion can escalate.

Several lawsuits argue that Roblox’s design encourages this behavior, intentionally or not, by failing to block links or restrict adults from inviting minors to private platforms. Once there, children are more easily manipulated into sharing inappropriate content, including child sexual abuse material, and can be subjected to ongoing threats and abuse.

Legal Grounds for Roblox Sexual Abuse Lawsuits

Families filing lawsuits allege that Roblox Corporation failed to meet its legal duty to protect children using its platform. These cases argue that abuse was foreseeable, preventable, and allowed to continue because the company ignored known risks tied to predatory behavior, weak safety tools, and inadequate oversight of adults interacting with minors.

Negligence and Corporate Misconduct

A core allegation in nearly every Roblox lawsuit is that the company failed to respond to repeated warnings that sexual predators were using the platform to target young users. Lawsuits claim Roblox had access to internal reports, moderation data, and user complaints showing patterns of grooming, coercion, and sexual abuse.

Despite this knowledge, plaintiffs argue, Roblox continued to allow unsafe chat features, did not require age verification, and failed to remove accused predators in a timely manner. As a result, many children were sexually exploited and suffered emotional and psychological harm.

Defective Platform Design and Safety Failures

Many Roblox sexual abuse lawsuits focus on the platform’s design itself. Plaintiffs allege that Roblox’s interactive features (such as private messaging, open social systems, and user-generated games) were built without adequate safeguards for minors.

According to the lawsuits, predators used these features to send sexually explicit messages to children, request explicit photos, and exchange explicit images with them. The failure to implement effective safety measures turned Roblox into an unsafe environment for younger children.

Distribution of Sexually Explicit and Harmful Material

Several lawsuits allege that Roblox allowed the circulation of sexually explicit images and harmful material, including child sexual abuse content, through chats and in-game interactions. Parents report discovering that their children were pressured into sending explicit photos in exchange for attention, in-game rewards, or Roblox’s in-game currency.

According to the lawsuit filings, Roblox did not adequately monitor or block this content, even after receiving user reports. Plaintiffs argue this failure directly contributed to emotional trauma, sexual exploitation, and, in some cases, physical harm.

Violations of Federal Child Protection Laws

Attorneys representing families assert that Roblox Corporation violated federal child protection laws, including the Children’s Online Privacy Protection Act (COPPA) and the Trafficking Victims Protection Act (TVPA). These claims focus on Roblox’s failure to obtain and verify parental consent, restrict adult-minor communication, or use available tools such as facial age estimation.

A number of lawsuits claim that Roblox’s practices enabled child sexual exploitation by allowing adult predators to operate freely on a platform marketed to children. Plaintiffs argue these violations support civil liability for sexual abuse, trafficking, and exploitation.

Deceptive Marketing and Consumer Fraud

Another legal theory cited in many Roblox lawsuits involves consumer fraud. Parents claim that Roblox marketed itself as a safe, moderated gaming platform for children while concealing the extent of explicit content, grooming risks, and predatory behavior occurring behind the scenes.

According to the lawsuits, these misleading assurances persuaded families to trust Roblox with their children’s safety, exposing those children to an environment where sexual abuse and exploitation were allowed to continue unchecked.

Failure to Warn, Intervene, or Remove Predators

Families also allege that Roblox failed to warn parents about known dangers or intervene when abuse was reported. Many lawsuits describe repeated reports of inappropriate messages, explicit photos, and suspicious accounts, only for the reported users to remain active.

By failing to act, plaintiffs argue Roblox enabled ongoing exploitation and compounded the emotional distress suffered by sexually abused children. These failures are central to claims of negligence and child endangerment.

Legal Limits of Section 230 Immunity

Roblox often points to Section 230 of the Communications Decency Act as a defense. However, plaintiffs in Roblox lawsuits argue that this protection does not apply where a company actively designs and monetizes systems that enable harm.

The lawsuits claim that Roblox’s use of virtual currency, social incentives, and engagement-driven features encouraged unsafe interactions and exploitation. Families argue that because Roblox went beyond passive hosting, the company can be held liable through civil legal action.

Who Can File a Lawsuit Against Roblox?

Families may be eligible to file a Roblox sexual abuse lawsuit if a child was groomed, coerced, or exposed to inappropriate content through the Roblox platform or connected apps like Discord.

You may qualify to file a lawsuit against Roblox if:

- A child in your care was groomed, coerced, or sexually exploited by an adult through a Roblox account or in-game chat

- Your child received explicit messages or was exposed to child sexual abuse material while using Roblox or communicating through the messaging app Discord

- Reports of suspicious or predatory behavior, or of explicit images being sent, were made but ignored or mishandled by the platform

- Your family experienced emotional distress, financial harm, or physical harm connected to abuse that occurred through the Roblox or Discord platforms

Parents, legal guardians, or court-appointed representatives can file on behalf of a minor. In some cases, survivors who were sexually abused through Roblox as children may file a lawsuit independently once they reach adulthood.

What Damages Can Victims Recover in a Roblox Settlement?

Sexual exploitation victims may be entitled to seek financial compensation through a Roblox sexual abuse lawsuit. These claims focus on the wide-ranging harm caused by predatory behavior, including the emotional, psychological, and financial toll that abuse can take on both children and their families.

Damages in a lawsuit against Roblox may include:

- Costs of medical care and mental health treatment related to emotional trauma, anxiety, PTSD, and long-term psychological harm

- Lost income or reduced work capacity for parents who needed to leave jobs or reduce hours to care for a child in crisis

- Educational disruption or setbacks for children struggling with the impact of abuse and ongoing recovery

- Emotional distress and reduced quality of life caused by exposure to inappropriate content, coercion, and abuse by adult predators

In cases involving corporate misconduct, such as knowingly allowing abuse to continue or failing to act on platform safety warnings, punitive damages may be awarded to punish the company for reckless or grossly negligent behavior.

How Our Roblox Lawsuit Lawyers Can Help You Take Legal Action

If your child was harmed through Roblox or related platforms like Discord, our law firm is here to help you take meaningful legal action. We represent families across the country in Roblox sexual abuse lawsuits, working to hold companies accountable when they fail to protect children from abuse, manipulation, and exposure to explicit content.

Our sexual abuse lawyers understand how overwhelming these situations can be. Many parents trusted Roblox as a safe platform, only to discover, sometimes after serious harm had already occurred, that their child had been targeted by a sexual predator.

Our team is experienced in complex child sexual abuse and tech platform liability cases. We work with digital forensics professionals, child development experts, and survivors’ advocates to build strong claims for financial compensation and platform reform.

We investigate the following in each case:

- User communication logs and chat records from the Roblox account

- Content moderation failures and delayed response to abuse reports

- Whether the platform failed to remove explicit content or known predators

- Use of Roblox’s in-game currency in grooming or coercion attempts

- Platform design features that encouraged off-platform contact or unsafe interactions

We focus not only on proving how the abuse occurred, but on connecting it directly to failures in Roblox’s systems, policies, and decision-making. Our experienced personal injury lawyers prepare every case for the possibility of trial, while also staying engaged with potential settlements as the litigation progresses.

Whether your child was coerced into sharing sexually explicit images, lured into a Discord chatroom by an adult predator, or exposed to inappropriate material within a Roblox game, we are prepared to act.

We approach each case with discretion, urgency, and a trauma-informed strategy designed to protect the privacy of your family while aggressively pursuing justice through the courts.

Schedule a Confidential Case Evaluation

Our firm offers a free consultation for families impacted by abuse on Roblox or Discord. We will listen to your story, answer your questions, and explain your legal rights in plain terms.

Whether your case involves grooming, the sharing of explicit messages, or the distribution of child sexual abuse material, our sexual abuse lawyers are here to support you with honest legal advice and experienced representation. We know how hard it is to talk about these issues, but no family should go through it alone.

There is no cost to speak with us, and no obligation to move forward. If you choose to file a claim, we handle Roblox lawsuits on a contingency fee basis; you pay nothing unless we secure financial compensation for your family.

Contact us today to schedule your confidential case evaluation. We will discuss your case, explain your rights, and show how we may be able to help you hold Roblox accountable for the harm your child experienced.